In this lesson we are going to go off on a bit of a tangent and take care of some housekeeping to accommodate our growing code base. Until now, we have been writing all the code in a single file and compiling it by invoking gcc on the command line. Neither approach scales to a full embedded development project. We need to separate our code into logical modules, create header files with APIs to interface with them, and introduce a new tool that will help us maintain our build system and environment. This tool is called ‘make’. GNU make is the de facto standard in the open source community for managing build environments. Many IDEs (notably Eclipse and its derivatives) use make, or some form of it, to manage the build. To the user it looks like a set of files in a folder which get compiled when you press build, but under the hood make is running a series of scripts that do all the real work. Make does several things which we will look at in this tutorial. First, it allows you to define compile rules, so instead of invoking gcc from the command line manually, you can script it so that the files and compiler options are passed in automatically. Second, it lets you organize your code into directories, for example one directory for source files, one for header files and another for the build output. Finally, it tracks dependencies between files: not every file needs to be recompiled every time, and make determines which ones do with the help of some rules we will add to the script. Make is an extremely powerful tool, so we will only scratch the surface in this tutorial to get us started. But before we jump into make, we must start by cleaning up our code.

Reorganizing the code

As you know, all the code we have written is currently in one file, main.c. For such a small project, this is arguably acceptable. However, in any real project, functions should be divided into modules with well-defined APIs in header files. We also do not want a flat directory structure, so we will organize the code into directories. The directory structure we will start with is simple yet expandable. We will create three directories:

- src: where all the source files go

- include: where all the header files go

- build: where all the output files go

Create the new directories now.

cd ~/msp430_launchpad
mkdir src
mkdir include

The build directory will be generated automatically by the build, so we don’t have to create it manually. Built objects and binaries are rarely checked into source control (git), so the build directory should be added to the .gitignore file. Open the .gitignore file (a period in front of a filename means it is hidden in Linux; you can see it with the command ls -al), add ‘build/’ on the line after ‘*.out’, then save and close. You will not see the new directories in git status until there is a file in them, as git ignores empty directories. Move main.c into the src/ directory.

mv main.c src

Open main.c in your editor and let’s look at how we can separate this file into modules. The main function is like your application; think of it as your project-specific code. Start by asking: what does this file need to do? What tasks does it perform? Let’s break this down. The main program needs to:

- enable / disable / pet the watchdog

- verify the calibration data

- set up the clocks

- initialize the pins

- perform the infinite loop which is the body of the application

To enable, disable and pet the watchdog, does the main program simply need to invoke the functions that we wrote, or does it need knowledge of the watchdog implementation? Does it need to know anything about the watchdog control register and what its bits do? No, not at all; it simply needs to be able to invoke those functions. From the perspective of the application, the watchdog functions could be stubs. That would be a pretty useless watchdog, but it would satisfy the requirements of main. The implementation of the watchdog is irrelevant.
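To illustrate, here is a minimal sketch of such a stub, for example to let the application logic be compiled and exercised on a PC. It implements the same three functions that watchdog.h declares later in this lesson; the pet counter and the watchdog_stub_pet_count() accessor are hypothetical additions for testing, not part of the tutorial’s code.

```c
#include <assert.h>

/* Same prototypes as the real watchdog API used by main */
void watchdog_disable(void);
void watchdog_enable(void);
void watchdog_pet(void);

/* Hypothetical accessor, only useful for host-side testing */
unsigned int watchdog_stub_pet_count(void);

static int stub_enabled = 0;           /* Set by watchdog_enable()      */
static unsigned int stub_pet_count = 0; /* Pets seen while enabled      */

void watchdog_disable(void)
{
    stub_enabled = 0;
}

void watchdog_enable(void)
{
    stub_enabled = 1;
}

void watchdog_pet(void)
{
    /* No hardware to touch: just record that the application petted us */
    if (stub_enabled) {
        stub_pet_count++;
    }
}

unsigned int watchdog_stub_pet_count(void)
{
    return stub_pet_count;
}
```

main cannot tell the difference, which is exactly the point: it only depends on the three function signatures.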

Verifying the calibration data is another example of code the main application does not need to know about. In fact, the only piece of code that relies on this check is the clock module setup. Speaking of which, does the application need to know how the clocks are set up? Not really. It may need to know the clock speeds in order to configure some peripherals, but not the details of the DCO configuration. Those are board-specific, not application-specific.

Finally, the pin configuration. The application relies on the pins being configured correctly in order to read and write them, but the pin muxing needs to be done only once and, again, depends on the board. The application can then choose whether or not to use them. Therefore the pin muxing can be considered part of the board initialization. Hopefully you see where we are going with this. We are trying to categorize functionality so that we can create reusable modules. It isn’t always clear-cut, and it often takes experience and many iterations to figure out what works, but when done properly, the code will be much more maintainable and portable. In our case we have defined the following modules:

- board initialization

- clock module initialization

- pin muxing / configuration

- watchdog

- TLV configuration data

- application

We could abstract this even further by creating separate modules for clock configuration and pin muxing, but there is no need at this point. It’s good practice to modularize your code, but only to a certain extent. Abstract it too much without justification, and you create more work for yourself and more complicated code for no good reason. Try to find a middle ground that respects your time and effort constraints but still produces nice clean code (we will look at what that means throughout the tutorials). Remember, you can always refactor later, so it doesn’t have to be perfect the first time around.

So let’s look at the new header files and APIs we need to introduce to modularize our code as described above. Based on the code we have already written, we can move the APIs into new source and header files. The first API to look at is the watchdog. There are three watchdog functions in our code at this point. Since they will no longer be static, we can remove their static declarations from main.c and move the declarations into a new file called watchdog.h, located in the include directory. We will also remove the leading underscore to indicate that they are public functions. As a note on good coding practice, code is easiest to read when the prefix of each function matches the name of the header that declares it; for example, watchdog_enable would be in watchdog.h. Yes, IDEs can find the function for you so you don’t have to search for anything, but there is no reason to mismatch naming conventions. Our watchdog.h file will look like this:

#ifndef __WATCHDOG_H__
#define __WATCHDOG_H__

/**
 * \brief Disable the watchdog timer module
 */
void watchdog_disable(void);

/**
 * \brief Enable the watchdog timer module
 * The watchdog timeout is set to an interval of 32768 cycles
 */
void watchdog_enable(void);

/**
 * \brief Pet the watchdog
 */
void watchdog_pet(void);

#endif /* __WATCHDOG_H__ */

Notice that whenever we create public functions declared in header files, we document them. This is considered good practice and should be done consistently. These are extremely simple functions, so not much documentation is required. A more complex function with parameters and a return code will need more information, but try to keep it as simple as possible for the reader without revealing too much about the internal workings of the function. This also leads to the concept of not changing your APIs. Changing an API should be avoided, as should changing its behaviour as seen by the outside world. The expected behaviour should be well defined, while the implementation can change as required. That way your comments will need minimal changes as well.

Now cut the function definitions out of main.c and move them to a new file called watchdog.c under the src/ directory. Remember to rename the functions to match those in the header file. We also need to include watchdog.h, as well as msp430.h to access the register definitions.

#include "watchdog.h"
#include <msp430.h>

/**
 * \brief Disable the watchdog timer module
 */
void watchdog_disable(void)
{
    /* Hold the watchdog */
    WDTCTL = WDTPW + WDTHOLD;
}

/**
 * \brief Enable the watchdog timer module
 * The watchdog timeout is set to an interval of 32768 cycles
 */
void watchdog_enable(void)
{
    /* Read the watchdog interrupt flag */
    if (IFG1 & WDTIFG) {
        /* Clear if set */
        IFG1 &= ~WDTIFG;
    }

    watchdog_pet();
}

/**
 * \brief Pet the watchdog
 */
void watchdog_pet(void)
{
    /**
     * Enable the watchdog with following settings
     * - sourced by ACLK
     * - interval = 32768 / 12000 = 2.73s
     */
    WDTCTL = WDTPW + (WDTSSEL | WDTCNTCL);
}
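The interval in the watchdog_pet comment is simply the cycle count divided by the ACLK frequency. As a worked example, here is a small host-side helper (hypothetical, not part of the project) that does the same arithmetic in milliseconds. Keep in mind that the VLO’s nominal 12 kHz varies noticeably between parts and with temperature, so the real timeout is only approximate.

```c
#include <assert.h>
#include <stdint.h>

/* Hypothetical helper: watchdog timeout in milliseconds for a given
 * interval (in clock cycles) and source clock frequency (in Hz).
 * Integer division truncates toward zero. */
uint32_t watchdog_timeout_ms(uint32_t interval_cycles, uint32_t clock_hz)
{
    /* Multiply first to keep precision; 32768 * 1000 easily fits in 32 bits */
    return (interval_cycles * 1000u) / clock_hz;
}
```

With the values from the comment, watchdog_timeout_ms(32768, 12000) evaluates to 2730, i.e. the 2.73 s quoted above.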

Another very important concept is knowing when and where to include header files. Not knowing this can result in extremely poorly written and impossible to maintain code. The rules for this are very simple:

- A public header file should include all the header files required to use it. This means that if you have defined a structure in header file foo.h and it is passed as an argument to one of the functions in bar.h, then bar.h must include foo.h. You don’t want the caller of an API to have to know which other files to include. If the caller must include two header files to use one API, the order matters: in this case, foo.h must be included before bar.h. If the caller happens to have already included foo.h for some other reason, they may not even notice it is required. This is a maintenance nightmare for anyone using your code.

- A public header file should include only what is required. Giant monolithic header files are impossible to maintain. Users of your APIs shouldn’t have to care about your implementation, so don’t include files or types that make it public, because when you change it, the calling code will have to be updated as well. Include the header files and private types required by the implementation only in the source file. This keeps the code portable and modular. Updating and improving your implementation is great; forcing callers to update their code because some structure changed, not so much.

- Finally, never include header files recursively (circularly), meaning foo.h includes bar.h and vice versa. This again results in a maintenance nightmare.

Not too complicated right? The goal is to make these rules second nature, so keep practising them every single time you write a header file. And if you catch me not following my own rules, please feel free to send me a nasty email telling me about it 🙂

Back to the code: we also want to separate out the verification of the configuration data (TLV). Again, create a new header file called tlv.h in the include/ directory. Remove the declaration of the _verify_cal_data function from main.c, move it to tlv.h and rename it to tlv_verify.

#ifndef __TLV_H__
#define __TLV_H__

/**
 * \brief Verify the TLV data in flash
 * \return 0 if TLV data is valid, -1 otherwise
 */
int tlv_verify(void);

#endif /* __TLV_H__ */

Now create the matching source file tlv.c in the src/ directory and move the implementation from main.c into it. We also need to move the helper function _calculate_checksum into the new file. It remains static, as it is private to this file.

#include "tlv.h"
#include <msp430.h>
#include <stdint.h>
#include <stddef.h>

static uint16_t _calculate_checksum(uint16_t *data, size_t len);

/**
 * \brief Verify the TLV data in flash
 * \return 0 if TLV data is valid, -1 otherwise
 */
int tlv_verify(void)
{
    /* Valid data makes the stored checksum plus the XOR of the
     * 62 bytes at 0x10C2 wrap to zero in 16 bits */
    uint16_t sum = (uint16_t) (TLV_CHECKSUM + _calculate_checksum((uint16_t *) 0x10c2, 62));

    return (sum == 0) ? 0 : -1;
}

static uint16_t _calculate_checksum(uint16_t *data, size_t len)
{
    uint16_t crc = 0;

    /* Convert the length in bytes to a length in 16-bit words */
    len = len / 2;

    while (len-- > 0) {
        crc ^= *(data++);
    }

    return crc;
}
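Because the checksum depends only on the data, the algorithm can be exercised on a PC. The sketch below reuses the XOR loop from _calculate_checksum and the wrap-to-zero test from tlv_verify, but reads an ordinary array instead of flash at 0x10C2. The function names, and the tlv_expected_checksum() helper that computes the value a valid checksum word must hold for the test to pass, are hypothetical.

```c
#include <assert.h>
#include <stddef.h>
#include <stdint.h>

/* XOR together len bytes' worth of 16-bit words, as _calculate_checksum does */
static uint16_t xor_checksum(const uint16_t *data, size_t len)
{
    uint16_t crc = 0;

    len = len / 2; /* Byte count to word count */
    while (len-- > 0) {
        crc ^= *(data++);
    }
    return crc;
}

/* Return 0 if stored + computed wraps to zero in 16 bits, -1 otherwise.
 * The cast matters on a PC, where int is wider than 16 bits. */
int tlv_verify_buffer(uint16_t stored, const uint16_t *data, size_t len)
{
    uint16_t sum = (uint16_t)(stored + xor_checksum(data, len));

    return (sum == 0) ? 0 : -1;
}

/* Hypothetical helper: the checksum value that makes the data verify */
uint16_t tlv_expected_checksum(const uint16_t *data, size_t len)
{
    return (uint16_t)(0u - xor_checksum(data, len));
}
```

Flipping any bit in the data changes the XOR, so the sum no longer wraps to zero and verification fails.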

The last header file is board.h, which requires a new API to initialize and configure the device for the board-specific application. The prototype looks like this:

#ifndef __BOARD_H__
#define __BOARD_H__

/**
 * \brief Initialize all board dependent functionality
 * \return 0 on success, -1 otherwise
 */
int board_init(void);

#endif /* __BOARD_H__ */

Now we can create board.c in the src/ directory and implement the API. Cut everything from the beginning of main up to and including the call to watchdog_enable, and paste it into the new function. Then clean up the body of the function to use the new APIs: include board.h, watchdog.h and tlv.h, and update the function calls to reflect our refactoring.

#include "board.h"
#include "watchdog.h"
#include "tlv.h"
#include <msp430.h>

/**
 * \brief Initialize all board dependent functionality
 * \return 0 on success, -1 otherwise
 */
int board_init(void)
{
    watchdog_disable();

    if (tlv_verify() != 0) {
        /* Calibration data is corrupted...hang */
        while(1);
    }

    /* Configure the clock module - MCLK = 1MHz */
    DCOCTL = 0;
    BCSCTL1 = CALBC1_1MHZ;
    DCOCTL = CALDCO_1MHZ;

    /* Configure ACLK to be sourced from VLO = ~12KHz */
    BCSCTL3 |= LFXT1S_2;

    /* Configure P1.0 as digital output */
    P1SEL &= ~0x01;
    P1DIR |= 0x01;

    /* Set P1.0 output high */
    P1OUT |= 0x01;

    /* Configure P1.3 to digital input */
    P1SEL &= ~0x08;
    P1SEL2 &= ~0x08;
    P1DIR &= ~0x08;

    /* Pull-up required for rev 1.5 Launchpad */
    P1REN |= 0x08;
    P1OUT |= 0x08;

    /* Set P1.3 interrupt to active-low edge */
    P1IES |= 0x08;

    /* Enable interrupt on P1.3 */
    P1IE |= 0x08;

    /* Global interrupt enable */
    __enable_interrupt();

    watchdog_enable();

    return 0;
}

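Finally, we clean up main so that it calls board_init and uses the renamed watchdog_pet API. main.c now needs to include board.h and watchdog.h, in addition to msp430.h, which it still uses for the GPIO access and the ISR.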

int main(int argc, char *argv[])
{
    (void) argc;
    (void) argv;

    if (board_init() == 0) {
        /* Start blinking the LED */
        while (1) {
            watchdog_pet();

            if (_blink_enable != 0) {
                /* Wait for LED_DELAY_CYCLES cycles */
                __delay_cycles(LED_DELAY_CYCLES);

                /* Toggle P1.0 output */
                P1OUT ^= 0x01;
            }
        }
    }

    return 0;
}

Now isn’t that much cleaner and easier to read? Is it perfect? No. But is it better than before? Definitely. The idea behind refactoring in embedded systems is to make the application-level code as agnostic as possible to the device it runs on. If we were to take this code and run it on an Atmel or PIC, all we should have to change is the implementation of the hardware-specific APIs. Obviously, main.c is not there yet: it still accesses the GPIO registers directly and contains an ISR, neither of which is portable. We could create a GPIO API and implement all GPIO accesses there, but for now there is no need. Similarly, we could make an interrupt API which allows the caller to attach a handler function to any interrupt, as well as enable or disable it. This type of abstraction is called hardware abstraction, and the code / APIs that implement it are called the hardware abstraction layer (HAL).
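As an illustration of what such a GPIO layer might look like, here is a minimal, hypothetical sketch. The function names (gpio_set_direction, gpio_write, gpio_toggle, gpio_read_output) and the struct that simulates the port registers are assumptions for the example; on the MSP430 the implementation would write P1DIR and P1OUT instead of the simulated fields, while the application would only ever see the API. Pins are numbered 0 to 7 within a single port.

```c
#include <assert.h>
#include <stdint.h>

/* Hypothetical simulated port standing in for P1DIR / P1OUT */
struct gpio_port {
    uint8_t dir; /* 1 = output, 0 = input */
    uint8_t out; /* Output latch */
};

static struct gpio_port port1;

/* Public API: the application never touches registers directly */
void gpio_set_direction(uint8_t pin, int output)
{
    if (output) {
        port1.dir |= (uint8_t)(1u << pin);
    } else {
        port1.dir &= (uint8_t)~(1u << pin);
    }
}

void gpio_write(uint8_t pin, int value)
{
    if (value) {
        port1.out |= (uint8_t)(1u << pin);
    } else {
        port1.out &= (uint8_t)~(1u << pin);
    }
}

void gpio_toggle(uint8_t pin)
{
    port1.out ^= (uint8_t)(1u << pin);
}

int gpio_read_output(uint8_t pin)
{
    return (port1.out >> pin) & 1u;
}
```

With an API like this, the blink loop in main would call gpio_toggle(0) instead of writing P1OUT, and only the implementation file would change when moving to another device.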

Makefiles

Now that the code is nicely refactored, on to the basics of make. The script that make reads is called a makefile. To create one, you simply create a new text file named ‘makefile’ (or ‘Makefile’). Before we begin writing the makefile, let’s discuss the basic syntax. For more information, refer to the GNU make manual.

The basic building blocks of makefiles are rules. A rule defines how an output is generated from a given set of prerequisites. The output is called the target, and make automatically determines whether the target is up to date with respect to its prerequisites. If the target does not exist, or any of the prerequisites is newer than the target, the instructions (called the recipe) are executed. The syntax of a rule is as follows:

<target> : <prerequisites>
	<recipe>

Note that whitespace matters in makefiles. Each recipe line must be indented with a tab character. Most editors take care of this for you, but if your editor replaces tabs with spaces in makefiles, make will reject the syntax and throw an error (typically ‘missing separator’). Make does allow several targets in one rule, but we will stick to one target per rule; the number of prerequisites is unlimited. For example, say we want to compile main.c to output main.o; the rule might look like this:

main.o: main.c
	<recipe>

If make is invoked and the target main.o is newer than main.c, no action is required. Otherwise, the recipe is invoked. What if main.c includes a header file called main.h? How should the rule look then?

main.o: main.c main.h
	<recipe>

It is important to list all the dependencies of the file as prerequisites, otherwise make cannot do its job correctly. If the header file is not listed as a prerequisite, changes to it will not trigger a rebuild, so the build may not behave as expected and then ‘mysteriously’ start working once main.c itself is changed. This becomes even more important when multiple source files reference the same header. If only one of the objects is rebuilt after a change to the header, the executable may end up with mismatched data types, enumerations and so on. A robust build system is very important, because there is nothing more frustrating than making tons of changes while debugging that seem to have no effect, only to find out that your build system was at fault.
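The up-to-date check that make performs for a single rule can be sketched in C with the POSIX stat() call: rebuild if the target is missing or if any prerequisite has a newer modification time. This is a simplification under stated assumptions (the function name needs_rebuild is hypothetical, only whole-second timestamps are compared, and real make also follows chains of rules and handles phony targets), but it shows why a header that is not listed as a prerequisite can never trigger a rebuild.

```c
#include <assert.h>
#include <stddef.h>
#include <sys/stat.h>

/* Return 1 if target must be rebuilt, 0 if it is up to date, -1 on error.
 * Mirrors make's basic decision: rebuild when the target does not exist
 * or when any listed prerequisite is newer than it. */
int needs_rebuild(const char *target, const char *prereqs[], size_t count)
{
    struct stat t;
    struct stat p;
    size_t i;

    if (stat(target, &t) != 0) {
        return 1; /* Target missing: it must be built */
    }
    for (i = 0; i < count; i++) {
        if (stat(prereqs[i], &p) != 0) {
            return -1; /* Missing prerequisite: make would stop with an error */
        }
        if (p.st_mtime > t.st_mtime) {
            return 1; /* Prerequisite changed since the target was built */
        }
    }
    return 0; /* Only the prerequisites passed in are ever checked */
}
```

For main.o the prerequisite list would be {"main.c", "main.h"}; leave main.h out and an edit to it goes unnoticed.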

As you can imagine, in a project with many source files and many dependencies, creating rules for each one manually would be tedious and would almost certainly lead to errors. For this reason, targets and prerequisites can be defined using patterns. A common example is to take our rule from above and apply it to all C files.

%.o: %.c
	<recipe>

This rule tells make how to create an object file (a file ending in .o, to be specific) from the C file of the same name, using the recipe. The ‘%’ matches the part of the file name that the two share, called the stem, so main.o is built from main.c, watchdog.o from watchdog.c, and so on. Here we do not list the header files, because it would be nonsensical to make every header a prerequisite of every source file. Instead, there is the concept of generating dependencies, which we will look at later.
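The matching step behind a pattern rule can be sketched in C: strip the fixed suffix from the target to obtain the stem, then substitute the stem for ‘%’ in the prerequisite pattern. This hypothetical helper only handles target patterns of the form ‘%&lt;suffix&gt;’ (such as %.o); real make is more general, for instance allowing text before the ‘%’.

```c
#include <assert.h>
#include <stdio.h>
#include <string.h>

/* Match target against a pattern "%<suffix>" (e.g. "%.o") and write the
 * prerequisite pattern with '%' replaced by the stem into out.
 * Returns 0 on a match, -1 on no match or if out is too small. */
int pattern_prereq(const char *target, const char *target_pattern,
                   const char *prereq_pattern, char *out, size_t out_size)
{
    const char *suffix;
    const char *pct;
    size_t tlen;
    size_t slen;
    size_t stem_len;
    int n;

    if (target_pattern[0] != '%') {
        return -1; /* Only "%<suffix>" patterns are handled in this sketch */
    }
    suffix = target_pattern + 1;
    tlen = strlen(target);
    slen = strlen(suffix);

    /* '%' must match a non-empty stem and the target must end in suffix */
    if (tlen <= slen || strcmp(target + tlen - slen, suffix) != 0) {
        return -1;
    }
    stem_len = tlen - slen; /* e.g. "main" for "main.o" */

    pct = strchr(prereq_pattern, '%');
    if (pct == NULL) {
        return -1;
    }
    /* prefix before '%', then the stem, then the rest of the pattern */
    n = snprintf(out, out_size, "%.*s%.*s%s",
                 (int)(pct - prereq_pattern), prereq_pattern,
                 (int)stem_len, target, pct + 1);
    return (n < 0 || (size_t)n >= out_size) ? -1 : 0;
}
```

For the rule above, pattern_prereq("main.o", "%.o", "%.c", buf, sizeof buf) fills buf with main.c.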

Makefiles have variables similar to any other programming or scripting language. Variables in make are always interpreted as strings and are case-sensitive. The simplest way to assign a variable is by using the assignment operator ‘=’, for example

VARIABLE = value

Note that variables are usually named using capital letters, as this helps differentiate them from command line functions, arguments or filenames. Also notice that although the variable is a string, the value is not in quotes. Make does not treat quotes specially: everything after the assignment operator (minus leading whitespace) becomes the value, spaces included, and any quotes you add simply become part of that value. To reference a variable in the makefile, precede it with a dollar sign ($) and enclose its name in parentheses (or braces), for example

<target> : $(VARIABLE)
	@echo $(VARIABLE)

This would print out the value assigned to the variable. Putting the ‘@’ sign in front of the echo command tells make not to print the command it is executing, only the output of the command. You may be wondering how the shell command ‘echo’ can be invoked directly from make. Each recipe line is passed to the shell (/bin/sh by default) for execution, so any shell command can be used in a recipe; outside of a recipe, a special syntax (the shell function, which we will use later) is required. Make also has a set of implicit variables for common tools. ‘CC’ is one example: it names the C compiler and defaults to ‘cc’, which on most Linux systems is gcc. However, this is the host gcc, not our MSP430 cross compiler, so this variable will have to be overridden.

The value assigned to a variable need not be a constant string either. One of the most powerful uses of variables is that they can contain shell commands or makefile functions. Such variables are often called macros. The ‘=’ assignment operator tells make that the variable should be expanded every time it is used. For example, say we want to find all the C source files in the current directory and assign them to a variable.

SRCS=$(wildcard *.c)

Here ‘wildcard’ is a make function which searches the current directory for anything that matches the pattern *.c. When a macro like SRCS may be used in more than one place in the makefile, it is usually better not to re-evaluate the expression every time it is referenced. To avoid that, we use another type of assignment operator, the simply expanded assignment operator ‘:=’, which evaluates the expression once, at the point of assignment.

SRCS:=$(wildcard *.c)

For most assignments, it is recommended to use the simply expanded variables unless you know that the macro should be expanded each time it is referenced.

The last type of assignment operator is the conditional variable assignment, denoted by ‘?=’. The variable is assigned a value only if it is not already defined. This is useful when a variable may be exported in the environment of the shell: the makefile needs that variable, but should not overwrite it if it is already defined. If you have exported a variable from the shell (as we did in lesson 2), that variable is in the environment, and make reads the environment and has access to those variables when executing the makefile. One example where this is useful is defining the path to the toolchain. I like to install all my toolchains to the /opt directory, but some people like to install them in their home directory. To account for this, I can assign the variable as follows:

TOOLCHAIN_ROOT?=~/msp430-toolchain

That keeps everyone who has the toolchain in their home directory happy. But what about me, with my toolchain under /opt? I simply add an environment variable to my system (for help, see section 4), which is equivalent to a persistent version of the export command. Whenever I compile, make sees that TOOLCHAIN_ROOT is defined in my environment and uses it as is.
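In C terms, TOOLCHAIN_ROOT?=~/msp430-toolchain behaves much like reading the variable from the environment with the standard getenv() and falling back to a default only when it is unset. The helper name below is hypothetical, and the analogy covers only the environment case (in make, a definition earlier in the makefile or on the command line also counts). Note that, as with ?=, a variable that is set but empty still counts as defined.

```c
#include <assert.h>
#include <stdlib.h>

/* Mimic TOOLCHAIN_ROOT?=~/msp430-toolchain: use the environment value if the
 * variable exists (even if it is empty), otherwise fall back to the default. */
const char *toolchain_root(void)
{
    const char *value = getenv("TOOLCHAIN_ROOT");

    return (value != NULL) ? value : "~/msp430-toolchain";
}
```

Running export TOOLCHAIN_ROOT=/opt/msp430-toolchain before the build is the equivalent of my persistent environment setting: the default is then never used.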

Variables built with substitutions are also how a rule ends up being invoked for many files at once. One of the most common examples is generating the list of object files from the list of sources. To do this, we can use a substitution reference, which converts every .c file name in SRCS to a .o file name and stores the result in a new variable OBJS.

OBJS:=$(SRCS:.c=.o)

This is shorthand for make’s pattern substitution function, patsubst; it is equivalent to $(patsubst %.c,%.o,$(SRCS)). The substitution itself only produces a list of file names. When those names are used as prerequisites of another target (as OBJS will be for the final executable), make looks for a rule that can build each one, such as the %.o: %.c pattern rule, and runs its recipe once per object file. Pattern substitution, as well as make’s many other string functions, can also be used to strip paths, add prefixes or suffixes, filter, sort and more. Whether a rule ends up being invoked depends on how the resulting list is used in your makefile.

Rules and variables are the foundations of makefiles. There is much more but this short introduction is enough to get us started. As we write our makefile, you will be introduced to a few new concepts.

Writing our Makefile

We made a whole bunch of changes to our code, and compiling from the command line using gcc directly is no longer practical. We need to write our first makefile using the principles from above. If you have not yet downloaded the tagged code for this tutorial, now would be the time. We are going to go through the new makefile line by line to understand exactly how to write one. The makefile is typically placed in the project root directory, so open it up with a text editor. The first line starts with a hash (#), which denotes a comment in makefiles. Next we start defining the variables, starting with TOOLCHAIN_ROOT.

TOOLCHAIN_ROOT?=/opt/msp430-toolchain

Using the conditional variable assignment, it is assigned the directory of the toolchain. It is best not to end paths with a slash ‘/’, even if they are directories, because when you use the variable you will add another slash and end up with double slashes everywhere. This usually won’t break anything; it is just cosmetic. Next we want to create a variable for the compiler. The variable CC is implicit in make and defaults to the host compiler. Since we need the MSP430 cross-compiler, the variable is reassigned to point to that executable.

CC:=$(TOOLCHAIN_ROOT)/bin/msp430-gcc

Other executables inside the toolchain’s bin directory are often defined in the makefile as well, if they are required. For example, if we were to use the standalone linker ld, we would create a new variable LD and point it to the linker executable. The list of implicit variables can be found in the GNU make documentation.

Next the directories are defined.

BUILD_DIR=build
OBJ_DIR=$(BUILD_DIR)/obj
BIN_DIR=$(BUILD_DIR)/bin
SRC_DIR=src
INC_DIR=include

We have already created two directories, src and include, so SRC_DIR and INC_DIR point to those respectively. The build directory is where all the build output will go, and it will be created by the build itself. It has two subdirectories, obj and bin. The obj directory is where the individually compiled object files go, while the bin directory is for the final executable output. Once the directories are defined, the following commands are executed:

ifneq ($(BUILD_DIR),)
$(shell [ -d $(BUILD_DIR) ] || mkdir -p $(BUILD_DIR))
$(shell [ -d $(OBJ_DIR) ] || mkdir -p $(OBJ_DIR))
$(shell [ -d $(BIN_DIR) ] || mkdir -p $(BIN_DIR))
endif

The ifneq directive is similar to an ‘if not equal’ in C, but since everything is a string, it compares BUILD_DIR to nothing, which is equivalent to an empty string. Shell commands are then executed to check whether each directory exists and, if not, to create it. The square brackets are the shell’s test command, which serves as the condition, and ‘-d’ checks for a directory with the name that follows. As in C, ‘||’ is a short-circuit ‘or’: if the directory exists, the test succeeds and the rest is not executed. Otherwise, the mkdir command is invoked and the directory is created. The shell command is repeated for each subdirectory of build. Because these shell functions are expanded while make reads the makefile, the directories exist before any rule runs.
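For comparison, here is the same check-then-create logic written in C with the POSIX stat() and mkdir() calls. It is a sketch for illustration only (the name ensure_dir and the 256-byte path limit are assumptions); the loop that creates each missing path component in turn is what the -p in mkdir -p adds.

```c
#include <assert.h>
#include <errno.h>
#include <string.h>
#include <sys/stat.h>
#include <sys/types.h>

/* Equivalent of: [ -d path ] || mkdir -p path
 * Returns 0 if path is (now) a directory, -1 otherwise. */
int ensure_dir(const char *path)
{
    char buf[256];
    struct stat st;
    size_t i;
    size_t len = strlen(path);

    /* [ -d path ]: already a directory, so the mkdir is skipped */
    if (stat(path, &st) == 0 && S_ISDIR(st.st_mode)) {
        return 0;
    }
    if (len == 0 || len >= sizeof(buf)) {
        return -1;
    }
    memcpy(buf, path, len + 1);

    /* mkdir -p: create each component, ignoring ones that already exist */
    for (i = 1; i <= len; i++) {
        if (buf[i] == '/' || buf[i] == '\0') {
            char saved = buf[i];

            buf[i] = '\0';
            if (mkdir(buf, 0755) != 0 && errno != EEXIST) {
                return -1;
            }
            buf[i] = saved;
        }
    }
    return (stat(path, &st) == 0 && S_ISDIR(st.st_mode)) ? 0 : -1;
}
```

Calling ensure_dir("build/obj") creates build and then build/obj, and a second call returns immediately, just as the makefile lines do on every build after the first.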

Next the source files are saved to the SRCS variable.

SRCS:=$(wildcard $(SRC_DIR)/*.c)

Using the wildcard function, make searches SRC_DIR (but not its subdirectories) for any files that match the pattern *.c, which resolves to all of our C source files. Next come the object files. As we discussed earlier, pattern substitution can be used to generate the object file names that trigger the compile rule. The assignment

OBJS:=$(patsubst %.c,$(OBJ_DIR)/%.o,$(notdir $(SRCS)))

is the long-hand version of what we discussed above, with some differences. First, the patsubst function is written explicitly. Second, each object file name is prefixed with the OBJ_DIR path. This tells make that for a given source file, the respective object file should be generated under build/obj. We must strip the path from the source file names using the notdir function, so src/main.c becomes main.c. We need to do this because we do not want to prepend OBJ_DIR to the full source file path, which would give build/obj/src/main.o. Some build systems do this, and it is fine, but I prefer to have all the object files in one directory. One caveat of putting all the object files in one directory is that if two source files have the same name, their object files collide and the last one compiled wins. This is not such a bad thing, however, because it would be confusing to have two files with the same name in one project. The rule that this substitution invokes is defined later in the makefile.
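The transformation OBJS applies to each source path can be mimicked in C, which also makes the name-collision caveat easy to see. In this sketch (the name obj_path is hypothetical), taking the part after the last ‘/’ plays the role of notdir, and swapping the .c suffix for .o under OBJ_DIR plays the role of patsubst. One deliberate difference: for a name that does not end in .c this sketch returns an error, whereas patsubst would leave the word unchanged.

```c
#include <assert.h>
#include <stdio.h>
#include <string.h>

/* Map a source path to its object path, like
 * $(patsubst %.c,$(OBJ_DIR)/%.o,$(notdir $(SRCS))) does for one word.
 * Returns 0 on success, -1 if src does not end in ".c" or out is too small. */
int obj_path(const char *obj_dir, const char *src, char *out, size_t out_size)
{
    const char *base = strrchr(src, '/');
    size_t len;
    int n;

    base = (base != NULL) ? base + 1 : src; /* notdir: drop the directory */
    len = strlen(base);
    if (len < 3 || strcmp(base + len - 2, ".c") != 0) {
        return -1; /* '%' needs a non-empty stem before ".c" */
    }
    /* patsubst: prepend OBJ_DIR and replace the .c suffix with .o */
    n = snprintf(out, out_size, "%s/%.*s.o", obj_dir, (int)(len - 2), base);
    return (n < 0 || (size_t)n >= out_size) ? -1 : 0;
}
```

Both src/drivers/timer.c and src/app/timer.c map to build/obj/timer.o, which is exactly the collision described above.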

Next, the output file ELF is assigned.

ELF:=$(BIN_DIR)/app.out

This is just a simple way of defining the name and location of the final executable output file. We place it in the bin directory (although it’s technically not a binary). This file is the linked output of all the individual object files in build/obj. To understand how this works we need to look at the next two variables, CFLAGS and LDFLAGS. These two variables are common practice and hold the compiler flags and linker flags respectively. Let’s take a look at the compiler flags.

CFLAGS:= -mmcu=msp430g2553 -c -Wall -Werror -Wextra -Wshadow -std=gnu90 -Wpedantic -MMD -I$(INC_DIR)

The first flag is one we have been using all along to tell the compiler which device we are compiling for. The ‘-c’ flag tells gcc to compile (and assemble) the source without linking, so the linker is not invoked. The output is an object file containing the machine code, but the addresses of external symbols (symbols defined in other object files) are not yet resolved. Therefore you cannot load and execute this object file, as it is only a part of the executable. The -Wall -Werror -Wextra -Wshadow -std=gnu90 -Wpedantic flags tell the compiler to enable certain warnings and errors to help make the code robust. Enabling all these flags makes the compiler very sensitive to ‘lazy’ coding. -Wall, for example, turns on a standard set of common warnings (despite its name, not all of them), while -Wextra turns on some stricter ones. You can find out exactly which checks are enabled by looking at the gcc man page. -Werror turns all warnings into errors. For problems that are not syntax errors, the compiler often complains with warnings rather than errors, which means the output is still generated but with potential issues. Leaving these warnings uncorrected can result in undesired behaviour that is difficult to track down, because the warnings are only issued when that specific file is compiled. Once the file is compiled, make will not recompile it until it changes, so gcc no longer complains and it’s easy to forget. By forcing all warnings to be errors, you must fix everything up front.

In C, there is nothing stopping a source file from containing a global variable foo and then using the same name, foo, for a parameter of one of its functions. Inside that function, the parameter hides (shadows) the global: C’s scoping rules always pick the innermost declaration, so the code compiles without complaint even if you meant the global. Because this is an easy mistake to miss, -Wshadow makes the compiler warn whenever a declaration shadows another one, and with -Werror that warning becomes an error.
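A short example of exactly the situation -Wshadow catches; the names are made up for illustration. Without the flag it compiles cleanly, and inside scale() the parameter wins, so the global is unreachable by name there. With -Wshadow, gcc warns that the parameter shadows the global declaration.

```c
#include <assert.h>

int foo = 10; /* File-scope (global) variable */

/* The parameter foo shadows the global foo inside this function.
 * C resolves this deterministically (the innermost declaration wins),
 * but the author may well have meant the global. */
int scale(int foo)
{
    return foo * 2; /* Uses the parameter, never the global */
}
```

scale(3) returns 6 and leaves the global foo at 10; renaming the parameter (for example to factor) removes both the warning and the ambiguity for the reader.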

Finally, -std and -Wpedantic tell the compiler which standard to use and which extensions are acceptable. gnu90 is the GNU dialect of ISO C90, i.e. C90 with GNU extensions enabled. I would have preferred plain C90 (no GNU extensions, also called ansi), but the msp430.h header and intrinsic functions do not play nicely with it. -Wpedantic makes the compiler warn (and, with -Werror, fail) on anything that does not strictly conform to ISO C, except for extensions whose names begin and end with double underscores (think __attribute__). Together, these two flags mean no C++-style comments (‘//’), variables must be declared at the beginning of a block (i.e. right after an opening brace), amongst other things.

The -MMD flag tells the compiler to output make-compatible dependency information. Instead of listing the required header files explicitly as we did earlier, gcc determines the prerequisites automatically and stores them in a dependency file. When we build the code, make checks not only the source file but also all the prerequisites stored in its dependency file. If you look at a dependency file (these have the extension .d, as we will see later), it is really just a make rule whose target is the object file and whose prerequisites are the source file and the headers it includes. -MMD leaves out system headers such as the C library’s stdint.h; -MD would include them. Finally, the -I argument tells gcc which directory (or directories) to search for include files. In our case this is the variable INC_DIR, which resolves to the include/ directory.

Under the linker flags variable LDFLAGS, we only have to pass the device type argument. The default linker arguments are sufficient for now.

```makefile
LDFLAGS:= -mmcu=msp430g2553
```

Next there is the DEPS variable, which stands for dependencies.

```makefile
DEPS:=$(OBJS:.o=.d)
```

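As mentioned earlier, every object file gets a matching dependency file under the build/obj directory. DEPS builds that list by taking each object file in OBJS and replacing its .o suffix with .d. This substitution reference is the same shorthand for patsubst that we saw earlier for OBJS. There is no separate rule to generate the dependency files. gcc writes each one as a side effect of compiling the matching source file with -MMD.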

Finally, the rules. A rule typically produces a file (the target), but sometimes we need rules that do other things. These are called phony targets, and they should be declared as such by listing them as prerequisites of the special target .PHONY. The target all is an example of a phony target.

```makefile
.PHONY: all

all: $(ELF)
```

We don’t want a file named ‘all’ to be generated, but it is still a target that should be executed. Declaring it phony also means make will run it even if a file called ‘all’ happens to exist in the directory. The target all means "perform the full build". The prerequisite of all is the target ELF, which is the output file. For ‘make all’ to succeed, the output binary must therefore have been generated successfully and be up to date. The ELF target has its own rule below:

```makefile
$(ELF) : $(OBJS)
	$(CC) $(LDFLAGS) $^ -o $@
```

Its prerequisites are all the object files stored in the variable OBJS. The recipe for this rule brings us back to the linker issue. The compiled object files must be linked into the final ELF executable so that all addresses are resolved. To do this we can use gcc (CC), which automatically invokes the linker with the correct default arguments. All we have to do is pass LDFLAGS to CC and tell it what the input files are and what the output should be. The recipe for the link command introduces a new concept called automatic variables. Automatic variables represent the components of a rule. $@ refers to the target, while $^ refers to all the prerequisites. They make it convenient to write generic rules without explicitly listing the target and prerequisites. Without automatic variables, the equivalent rule would be:

```makefile
$(ELF) : $(OBJS)
	$(CC) $(LDFLAGS) $(OBJS) -o $(ELF)
```

To meet the prerequisites in OBJS for the ELF target, the individual sources must be compiled. This is where the path substitution comes in. The assignment of OBJS uses it to produce one object file path per source file. When make tries to build each of those object files, it looks for a rule whose target matches the file name and finds the final rule:

```makefile
$(OBJ_DIR)/%.o : $(SRC_DIR)/%.c
	$(CC) $(CFLAGS) $< -o $@
```

This rule compiles the source files with the CFLAGS arguments. It is a pattern rule, so it is invoked once for each file. Each time, the target is one object file and the prerequisite is the matching source file. This leads us to another automatic variable, $<, which refers to the first prerequisite only, rather than all of them as $^ does. The rule must match our path substitution. That is why the target is prefixed with the OBJ_DIR variable and the prerequisite with the SRC_DIR variable.

The last rule is the clean rule, which is another phony target. It simply deletes the entire build directory, so no object or dependency files remain. If you ever want to do a full rebuild, run make clean followed by make all. In shorthand on the command line:

```shell
make clean && make all
```

The last line in the makefile is the include directive for the dependency files. In make, the include directive includes other files, much like #include in C. The dash before include tells make not to report an error if the files do not exist. This is the case in a clean build, since the dependency files have yet to be generated. Once they exist, make uses them to determine what to rebuild. Open up one of the dependency files, main.d for example, to see what it contains:

```makefile
build/obj/main.o: src/main.c include/board.h include/watchdog.h
```

This is really just another rule, generated by the compiler, stating that main.o has the prerequisites main.c, board.h and watchdog.h. The rule has no recipe of its own. Make therefore combines these prerequisites with the pattern rule above, and main.o is recompiled whenever any of them changes. System header files (i.e. those from libc) are not listed, because -MMD omits them. The include directive must be placed at the end of the file so that it does not supersede the default target, all. Make treats the first rule it encounters as the default goal. If you place the include before the target all, one of the dependency rules becomes the default, and you will start to see weird behaviour when invoking make without an explicit target. By including the dependency rules at the end, invoking ‘make’ and ‘make all’ from the command line become synonymous. When we execute make from the command line, the output should look like this:

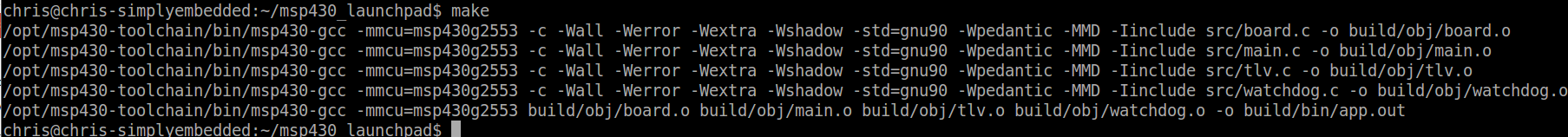

From the output you can see exactly what we have discussed. Each source file is compiled into an object file using the arguments defined by CFLAGS and stored under build/obj. Then all these object files are linked together to create the final executable, app.out. This is the file that is loaded onto the MSP430. The functionality is exactly the same as in the previous lesson. Some homework for those of you who are interested: create a new rule in the makefile called ‘download’ which will flash the output to the MSP430 automatically using mspdebug. The answer will be available in the next lesson.

This blog is, by far, the best educational treasure on MCUs – and certainly a landmark for the MSP430 – on the Internet. The organization and clarity of your teaching is beyond outstanding. Keep going regardless of the number of followers, you are highly appreciated. Thank you and I owe you a pizza and a beer if you’re ever in Rome, Italy.

Best

Stefano

Thanks Stefano! It’s great you’re finding the tutorials helpful. Your comments are very encouraging and much appreciated. I would love to come visit Rome (and all of Italy). It’s definitely on my list. It’s looking like a few years out, but I’ll let you know 😉 Cheers!

Hi! I’ve learned a lot from these tutorials. I’m going back over some of my projects and rewriting them, splitting up files and adding abstraction. Now that I’m compiling with makefiles, I’ve noticed that my .out and .hex files have gotten larger than when I threw everything in one file and compiled without make: 5.6K vs 8.5K for the .hex and 12K vs 37K for the .out (or .elf). Is this to be expected?

I’m using msp430-objcopy to make the .hex files.

Hi Justin,

Sorry it has taken me so long to get back to you on this question. I am in the middle of moving and starting a new job so I am very much behind.

Now, on to your comment. That is a great question. The short answer is yes, it is expected to a certain extent. It is a balance between writing good, readable, reusable, modular code on one hand and code size, performance, etc. on the other. If you put all your functionality in one giant function, your code will definitely be smaller and faster. This is because calling a function has overhead. The compiler must do several things which we don’t really see in C. First, the function arguments have to be loaded into registers, and the more arguments your function takes, the more operations are required to load that data. Then, when the function is called, the return address (the address of the next instruction) must be pushed onto the stack so that execution can resume where it left off when the function returns. The stack pointer has to be adjusted, and most likely additional stack space must be reserved for local variables. All of this then has to be undone when the function completes, and it all adds to your code size. You have to decide whether the trade-off is acceptable for your project. The way I look at it is: first write the code so it is good quality, readable, modular, etc. Start with good programming practices, basically. Then, if a particular piece of code is too big or not performing well enough, it can be optimized. There are many ways to optimize code without necessarily making it unreadable. Compiler optimizations are a topic we haven’t covered yet, but if you change -O0 to -O2 or -Os you may notice a significant improvement. Inline functions are also useful. If you have a small function that is used over and over again, you can use the inline keyword (usually written static inline) to suggest to the compiler that it may be best to inline the function (i.e. no function call overhead). The compiler may do this automatically anyway, and it may also choose to ignore your suggestion, so it doesn’t always work.
Now, you can ‘force’ the compiler to inline your function (and many people may scold me for even suggesting this) by not writing a function at all, but using a macro instead. Simple one- or two-liners can be replaced by a macro, and the preprocessor always expands it in place. That being said, function-like macros are often considered poor coding practice and are discouraged by many coding standards (MISRA C, for example). But those are some suggestions. Hope this helps clarify.
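To illustrate the two approaches, here is a small sketch with a hypothetical clamp_u8 helper written both ways. It uses __inline__ rather than inline because the plain inline keyword is not part of C90. GCC accepts the double-underscore spelling in every mode, including -std=gnu90 -Wpedantic.

```c
/* Function version: type-checked, and the argument is evaluated exactly once.
 * 'static __inline__' is only a hint; gcc may or may not inline it. */
static __inline__ unsigned int clamp_u8(unsigned int x)
{
    return (x > 255u) ? 255u : x;
}

/* Macro version: always expanded in place by the preprocessor, but there is
 * no type checking, and x is evaluated twice when it is not above 255. */
#define CLAMP_U8(x) (((x) > 255u) ? 255u : (x))
```

Both give 255 for an input of 300 and pass smaller values through unchanged. The catch with the macro is side effects. CLAMP_U8(n++) increments n twice when n is 255 or less, while clamp_u8(n++) always increments it exactly once.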

Hi there,

I have tried to use your makefile for another MSP430 project. However, my project uses the msp430f2619 instead of the msp430g2553. I changed the -mmcu value to my MCU, and when compiling I got this error:

```
/opt/msp430-toolchain/bin/msp430-gcc -mmcu=msp430f2619 build/obj/BCSplus.o build/obj/spi_master.o build/obj/i2c_slave.o build/obj/system.o build/obj/gpio.o build/obj/main.o build/obj/ad7193.o -o build/bin/app.out
/opt/msp430-toolchain/lib/gcc/msp430-none-elf/5.3.0/../../../../msp430-none-elf/bin/ld: cannot open linker script file msp430f2619.ld: No such file or directory
collect2: error: ld returned 1 exit status
makefile:46: recipe for target ‘build/bin/app.out’ failed
make: *** [build/bin/app.out] Error 1
```

It seems that the linker script is not located in the default directory being searched. Can you search the toolchain directory for the *.ld files and make sure that path is added to LDFLAGS in the makefile (using the -L option)?